An Introduction to Artificial Intelligence

What is AI?

Artificial intelligence (AI) is a technology that mimics human intelligence, allowing computer applications to learn from experience via iterative processing and algorithmic training.

To put it simply, AI is trying to make computers think and act like humans. The ideal characteristic of artificial intelligence is its ability to rationalize and take actions that have the best chance of achieving a specific goal.

Have you used that smartphone in your pocket to take pictures? Try taking a picture of a friend with it right now. Do you see how it's able to adjust the contrast and brightness, and perhaps draw rectangles around the faces of people in the picture?

The camera hasn't ever seen your friend before, and yet it's able to recognize a human face. That's AI at work. You've been using it all along, without realizing it.

The concept of what defines AI has changed over time, but at the core, there has always been the idea of building machines that are capable of thinking like humans. This article tries to put AI within the reach of absolute beginners.

AI is accomplished by studying the patterns of the human brain and by analyzing the cognitive process. It emphasizes three cognitive skills—learning, reasoning, and self-correction—skills that the human brain possesses to one degree or another.

Learning: The acquisition of information and the rules needed to use that information. The rules, which are called algorithms, provide computing devices with step-by-step instructions for how to complete a specific task.

Reasoning: Using the algorithm to reach the desired outcome.

Self-Correction: The process of continually fine-tuning AI algorithms and ensuring that they offer the most accurate results they can.

The AI field draws upon computer science, mathematics, psychology, linguistics, philosophy, neuroscience, artificial psychology, and many others.

It requires a foundation of specialized hardware and software for writing and training machine learning algorithms. No one programming language is synonymous with AI, but a few, including Python, R and Java, are popular.

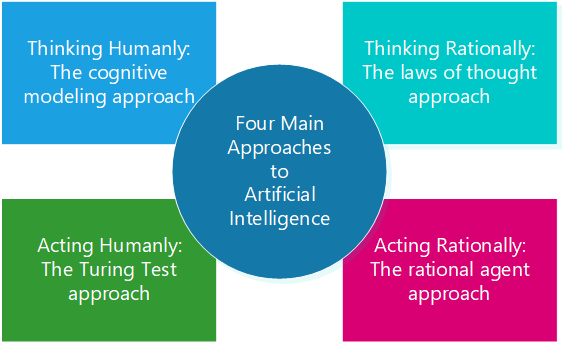

Approaches of AI

There are four approaches to AI and they are as follows:

Acting humanly (The Turing Test approach): This approach was proposed by Alan Turing. The idea behind it is that a computer passes the test if a human interrogator, after asking some written questions, cannot tell whether the written responses come from a human or from a computer.

Thinking humanly (The cognitive modeling approach): The idea behind this approach is to determine whether the computer thinks like a human.

Thinking rationally (The "laws of thought" approach): The idea behind this approach is to determine whether the computer thinks rationally i.e. with logical reasoning.

Acting rationally (The rational agent approach): The idea behind this approach is to determine whether the computer acts rationally, i.e. whether it takes the actions that have the best chance of achieving its goals.

Why is AI Needed?

1. To perform human functions, such as planning, reasoning, problem-solving, communication, and perception, more effectively, efficiently, and at a lower cost.

2. To speed up, improve precision, and increase the efficacy of human endeavors.

3. To predict fraudulent transactions, implement rapid and accurate credit scoring, and automate labour-intensive tasks in data administration.

4. To create expert systems that exhibit intelligent behavior with the capability to learn, demonstrate, explain, and advise their users.

5. To help machines find solutions to complex problems like humans do and apply them as algorithms in a computer-friendly manner.

History of AI

Artificial intelligence dates back to the late 1940s when computer pioneers like Alan Turing and John von Neumann first started examining how machines could "think."

One method for determining whether a computer has intelligence was devised by the British mathematician and World War II code-breaker Alan Turing. The Turing Test focused on a computer's ability to fool interrogators into believing its responses to their questions were made by a human being.

In 1956, the phrase "artificial intelligence" was coined by John McCarthy at the Dartmouth Summer Research Project on Artificial Intelligence.

Another early milestone followed in the late 1950s, when Allen Newell, Herbert Simon, and J. C. Shaw developed the General Problem Solver (GPS), a program designed to solve any problem that could be expressed as a set of formal rules. In practice, it could handle only simple, well-defined problems.

The early years of the 21st century were a period of significant progress in artificial intelligence. A major driver was the renewed development of self-learning neural networks.

By the mid-2010s, deep neural networks had matched or exceeded human performance on some narrowly defined benchmarks, such as image classification, and had substantially improved machine translation. There was an increasing emphasis on using deep neural networks for computer vision tasks, such as object recognition and scene understanding.

Finally, there was also a growing interest in using these same tools for speech recognition tasks like automatic speech recognition (ASR) and speaker identification (SID).

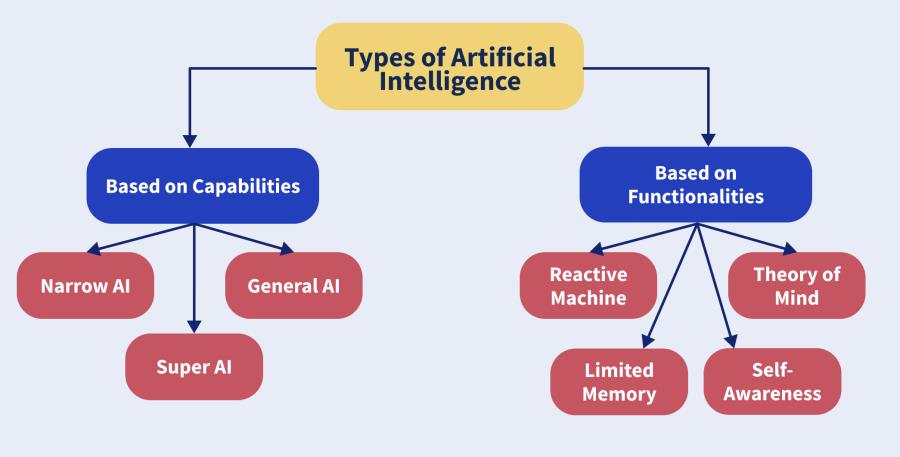

Types of AI

AI can be categorized into four types.

Type 1: Reactive machines. These AI systems have no memory and are task-specific. An example is Deep Blue, the IBM chess program that beat Garry Kasparov in the 1990s. Deep Blue can identify pieces on the chessboard and make predictions, but because it has no memory, it cannot use past experiences to inform future decisions.

Type 2: Limited memory. These AI systems have memory, so they can use past experiences to inform future decisions. Some of the decision-making functions in self-driving cars are designed this way. Limited memory AI is more complex and presents greater possibilities than reactive machines.

Type 3: Theory of mind. Theory of mind is a psychology term. When applied to AI, it means that the system would have the social intelligence to understand emotions. This type of AI will be able to infer human intentions and predict behaviour, a necessary skill for AI systems to become integral members of human teams. We have not yet achieved the technological and scientific capabilities necessary to reach this next level of AI.

Type 4: Self-awareness. This kind of AI would possess human-level consciousness and understand its own existence in the world, as well as the presence and emotional state of others. This type of AI does not exist yet.

AI can also be classified into three categories based on its capabilities. Rather than types of artificial intelligence, these are stages through which AI can evolve, and only one of them is actually possible right now.

1. Narrow AI: Narrow AI, also called Weak AI, is what we see all around us in computers today -- intelligent systems that have been taught or have learned how to carry out specific tasks without being explicitly programmed how to do so. This type of machine intelligence is evident in the speech and language recognition of the Siri virtual assistant on the Apple iPhone, in the vision-recognition systems on self-driving cars, or in the recommendation engines that suggest products you might like based on what you bought in the past. Unlike humans, these systems can only learn or be taught how to do defined tasks, which is why they are called narrow AI.

2. Artificial General Intelligence (AGI): AGI, also called Strong AI, is the type of adaptable intellect found in humans, a flexible form of intelligence capable of learning how to carry out vastly different tasks and applying that intelligence to solve any problem. It is the kind of AI we see in movies — like the robots from Westworld or the character Data from Star Trek: The Next Generation.

3. Artificial Super Intelligence (ASI): Here we enter the realm of science fiction, but ASI is often seen as the logical progression from AGI. If ASI is ever created, it would exceed all human abilities. ASI would not only replicate the complex emotions and intelligence of human beings but surpass them in every way.

How Does Artificial Intelligence Work?

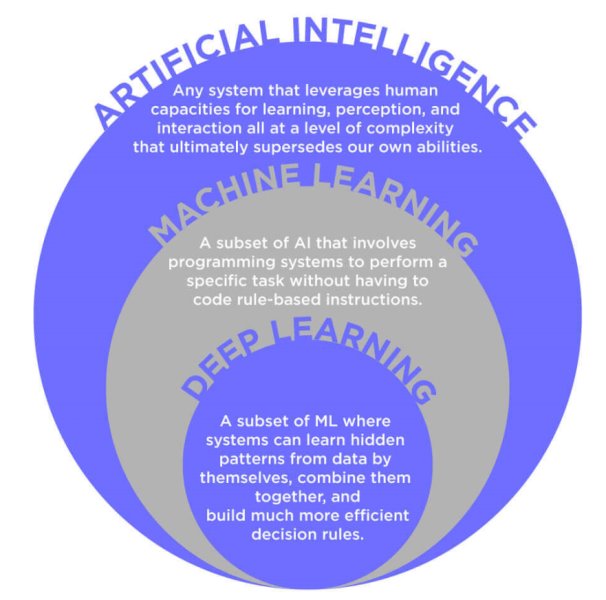

The main method of teaching a machine intelligence, or in other words, teaching a machine how to gather and process information, is a concept known as machine learning.

Machine Learning

Machine learning, a subset of AI, is where a computer system learns how to perform a task rather than being programmed how to do so. This is the science of getting a computer to act without being explicitly programmed. To learn, these systems are fed huge amounts of data, which they then use to learn how to carry out a specific task, such as understanding speech or captioning a photograph.

Machine learning is achieved by using complex algorithms that discover patterns and generate insights from the data they are exposed to.

Computers are good at following processes, i.e., sequences of steps to execute a task. If we give a computer steps to execute a task, it should easily be able to complete it. The steps are nothing but algorithms. An algorithm can be as simple as printing two numbers or as difficult as predicting who will win elections in the coming year!

So, how can we accomplish this?

Let’s take the example of predicting the weather forecast for 2020.

First of all, what we need is a lot of data! Let’s take the data from 2006 to 2019. Now, we will divide this data into an 80:20 ratio. 80 percent of the data is going to be our training data, and the remaining 20 percent will be our test data. Both parts are labelled, so we have the actual output for the entire 100 percent of the data acquired from 2006 to 2019.

What happens once we collect the data? We will feed the training data, i.e., 80 percent of the data, into the machine. Here, the algorithm is learning from the data which has been fed into it.

Next, we need to test the algorithm. Here, we feed the test data, i.e., the remaining 20 percent of the data, into the machine. The machine gives us the output. Now, we cross-verify the output given by the machine with the actual output of the data and check for its accuracy.

While checking for accuracy, if we are not satisfied with the model, we tweak the algorithm to give the precise output or at least somewhere close to the actual output. Once we are satisfied with the model, we then feed new data to the model so that it can predict the weather forecast for the year 2020.

With more and more sets of data being fed into the system, the output becomes more and more precise. The quality and size of this dataset are important for building a system to carry out its designated task accurately.
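The train-and-test workflow described above can be sketched in a few lines of Python. This is only an illustration, not a real forecasting system: the yearly temperatures are synthetic values generated from a made-up trend, a simple straight-line fit stands in for "the algorithm", and the helper names `fit_line` and `mean_abs_error` are hypothetical.

```python
# Synthetic yearly average temperatures (made-up values, not real measurements).
years = list(range(2006, 2020))  # 2006..2019
temps = [14.0 + 0.03 * (y - 2006) + 0.05 * ((y % 3) - 1) for y in years]

# Split 80:20 into training data and test data (earlier years train, later years test).
split = int(len(years) * 0.8)
train_x, test_x = years[:split], years[split:]
train_y, test_y = temps[:split], temps[split:]


def fit_line(xs, ys):
    """Least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    return slope, mean_y - slope * mean_x


def mean_abs_error(model, xs, ys):
    """Average absolute difference between predictions and actual values."""
    slope, intercept = model
    return sum(abs(slope * x + intercept - y) for x, y in zip(xs, ys)) / len(xs)


model = fit_line(train_x, train_y)                   # learn from the training data
test_error = mean_abs_error(model, test_x, test_y)   # check accuracy on the test data
forecast_2020 = model[0] * 2020 + model[1]           # predict for a new, unseen year
```

If `test_error` were too large, this is the point at which we would tweak the model and repeat the process before trusting `forecast_2020`.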

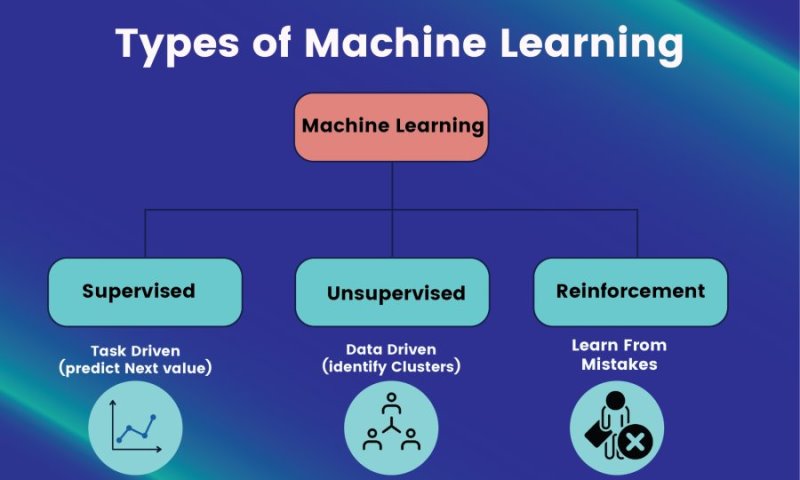

There are three types of machine learning algorithms:

Supervised learning. Data sets are labelled so that patterns can be detected and used to label new data sets.

A common technique for teaching AI systems is by training them using many labelled examples. These machine-learning systems are fed huge amounts of data, which have been annotated to highlight the features of interest.

These might be photos labelled to indicate whether they contain a dog or written sentences that have footnotes to indicate whether the word 'bass' relates to music or a fish. Once trained, the system can then apply these labels to new data, for example, to a dog in a photo that's just been uploaded. This process of teaching a machine by example is called supervised learning.
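As a rough sketch of learning from labelled examples, the snippet below uses a 1-nearest-neighbour rule, one of the simplest supervised methods: a new item receives the label of the most similar labelled example. The two features (weight and height) and all the numbers are invented for illustration; a real photo classifier would work on image data.

```python
# Labelled training examples: (weight_kg, height_cm) -> label.
# The numbers are invented for illustration only.
training_data = [
    ((30.0, 60.0), "dog"),
    ((25.0, 55.0), "dog"),
    ((4.0, 25.0), "not dog"),
    ((5.0, 23.0), "not dog"),
]


def predict(features):
    """Return the label of the closest labelled example (1-nearest neighbour)."""
    def squared_distance(example):
        point, _label = example
        return sum((a - b) ** 2 for a, b in zip(point, features))

    _point, label = min(training_data, key=squared_distance)
    return label


new_label = predict((28.0, 58.0))  # an unlabelled example the system has not seen
```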

Unsupervised learning. Data sets aren't labelled and are sorted according to similarities or differences.

In contrast, unsupervised learning uses a different approach, where algorithms try to identify patterns in data, looking for similarities that can be used to categorize that data.

An example might be clustering together fruits that weigh a similar amount or cars with a similar engine size. The algorithm isn't set up in advance to pick out specific types of data; it simply looks for data that can be grouped by similarity, for example, Google News grouping together stories on similar topics each day.
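The fruit example can be sketched with k-means, a common clustering algorithm (chosen here for illustration; the text does not name a specific method). The function `k_means_1d` is a hypothetical helper and the weights are invented. Note that no labels are supplied: the groups emerge from the numbers alone.

```python
def k_means_1d(values, k, iterations=10):
    """Group numbers into k clusters by moving each centre to the mean of its nearest values."""
    ordered = sorted(values)
    # Start with k centres spread evenly across the sorted values.
    centres = [ordered[(2 * i + 1) * len(ordered) // (2 * k)] for i in range(k)]
    clusters = [[] for _ in range(k)]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for v in values:
            nearest = min(range(k), key=lambda i: abs(v - centres[i]))
            clusters[nearest].append(v)
        # Keep the old centre if a cluster ends up empty.
        centres = [sum(c) / len(c) if c else centres[i] for i, c in enumerate(clusters)]
    return clusters


# Fruit weights in grams (invented values): some light fruits, some heavy ones.
fruit_weights = [150, 160, 155, 1200, 1150, 1250]
light, heavy = k_means_1d(fruit_weights, k=2)
```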

Reinforcement learning. Reinforcement learning is a behavioural learning model. The algorithm receives feedback from the analysis of the data, so it is guided toward the best outcome.

Reinforcement learning differs from supervised learning because the system isn’t trained with a sample data set. Rather, the system learns through trial and error. Therefore, a sequence of successful decisions will result in the process being "reinforced" because it best solves the problem at hand.

One of the most common applications of reinforcement learning is in robotics or game playing. Take the example of the need to train a robot to navigate a set of stairs. The robot changes its approach to navigating the terrain based on the outcome of its actions. When the robot falls, the data is recalibrated so the steps are navigated differently until the robot is trained by trial and error to understand how to climb stairs. In other words, the robot learns based on a successful sequence of actions.

The learning algorithm has to be able to discover an association between the goal of climbing stairs successfully without falling and the sequence of events that lead to the outcome.
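A heavily simplified version of the stair-climbing robot can be sketched with tabular Q-learning, one standard reinforcement learning algorithm (used here as an assumption; the text does not specify one). The environment is a hypothetical toy: at each stair the robot either takes a careful "step" or attempts a "leap", and in this toy world a leap always makes it fall. The rewards and parameter values are likewise invented.

```python
import random

TOP = 4                      # number of stairs in the toy environment
ACTIONS = ["step", "leap"]   # "step" climbs one stair; "leap" makes the robot fall


def act(state, action):
    """Return (next_state, reward, done) for the toy staircase."""
    if action == "leap":
        return 0, -1.0, True          # the robot falls and the attempt ends
    next_state = state + 1
    if next_state == TOP:
        return next_state, 1.0, True  # reached the top of the stairs
    return next_state, 0.0, False


def train(episodes=200, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(TOP) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Explore occasionally, otherwise exploit the best known action.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])
            next_state, reward, done = act(state, action)
            best_next = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            # Q-learning update: nudge the estimate toward reward + discounted future value.
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = next_state
    return q


q_table = train()
# The learned policy: the best-valued action on each stair.
policy = [max(ACTIONS, key=lambda a: q_table[(s, a)]) for s in range(TOP)]
```

After training, `policy` records which action the robot prefers on each stair. Falls lower the value of "leap", while successful climbs raise the value of "step", which is the association between goal and action sequence described above.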

Deep Learning

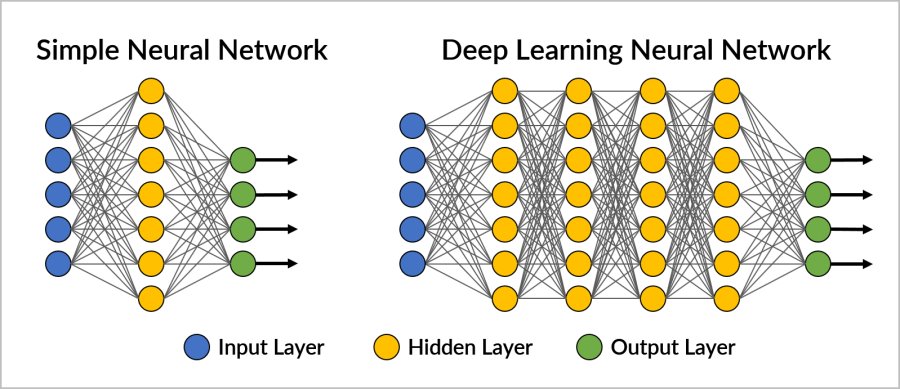

Deep learning is a subset of machine learning that incorporates neural networks in successive layers, stacked on top of each other, in order to learn from data in an iterative manner.

The structure and functioning of neural networks are very loosely based on the connections between neurons in the brain. Neural networks are made up of interconnected layers of algorithms that feed data into each other.

They can be trained to carry out specific tasks by modifying the importance attributed to data as it passes between these layers. During the training of these neural networks, the weights attached to data as it passes between the layers will continue to vary until the output from the neural network is very close to what is desired.

At that point, the network will have 'learned' how to carry out a particular task. The desired output could be anything from correctly labelling fruit in an image to predicting when an elevator might fail based on its sensor data.
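The idea of weights varying until the output is close to what is desired can be illustrated with a single artificial neuron trained by gradient descent. This is a minimal sketch, far smaller than a real network: the data follows an invented rule (y = 2x + 1), and the learning rate and number of passes are arbitrary choices.

```python
# Toy data following y = 2x + 1 (invented for illustration).
inputs = [0.0, 1.0, 2.0, 3.0]
targets = [1.0, 3.0, 5.0, 7.0]

weight, bias = 0.0, 0.0   # start from arbitrary values
learning_rate = 0.05

for _ in range(2000):
    # Gradients of the mean squared error with respect to the weight and the bias.
    grad_w = grad_b = 0.0
    for x, t in zip(inputs, targets):
        error = (weight * x + bias) - t
        grad_w += 2 * error * x / len(inputs)
        grad_b += 2 * error / len(inputs)
    # Move the weight and bias a small step in the direction that reduces the error.
    weight -= learning_rate * grad_w
    bias -= learning_rate * grad_b
```

After the loop, `weight` and `bias` end up close to 2 and 1, the values that reproduce the desired outputs.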

"Deep" in deep learning refers to a neural network that consists of three or more layers: an input layer, one or many hidden layers, and an output layer.

Input Layer: Data is ingested through the input layer.

Hidden Layer: The hidden layers are responsible for the mathematical computations or feature extraction on our inputs. More hidden layers allow the network to model more complex patterns, although additional layers do not guarantee a more accurate output.

Output Layer: The output layer is the last layer, and it provides the results computed from the hidden layers.
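To make the three kinds of layers concrete, here is a small sketch of data flowing from an input layer through one hidden layer to an output layer. The helper `dense_layer`, the sigmoid activation, and the hand-picked weights are all assumptions made for illustration; a real network would learn its weights during training.

```python
import math


def dense_layer(inputs, weights, biases):
    """One fully connected layer: a weighted sum per neuron, then a sigmoid activation."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(1.0 / (1.0 + math.exp(-total)))
    return outputs


# Hypothetical, hand-picked weights (two hidden neurons, one output neuron).
hidden_weights = [[0.5, -0.5], [1.0, 1.0]]
hidden_biases = [0.0, -1.0]
output_weights = [[1.0, -1.0]]
output_biases = [0.0]

input_layer = [1.0, 1.0]                                                   # data enters here
hidden_layer = dense_layer(input_layer, hidden_weights, hidden_biases)    # intermediate features
output_layer = dense_layer(hidden_layer, output_weights, output_biases)   # final result
```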

Deep learning is especially useful when you’re trying to learn patterns from unstructured data. Deep learning models, which are complex neural networks, are designed to loosely emulate how the human brain works so computers can be trained to deal with abstractions and problems that are poorly defined.

Self-driving cars are a recognizable example of deep learning since they use deep neural networks to detect objects around them, determine their distance from other cars, identify traffic signals and much more.

Why is Artificial Intelligence Important?

AI is important because it gives enterprises insights into their operations that they may not have been aware of previously, and in some cases, AI can perform tasks better than humans. This has helped fuel an explosion in efficiency and opened the door to entirely new business opportunities for some larger enterprises.

Prior to the current wave of AI, it would have been hard to imagine using computer software to connect riders to taxis, but today Uber has become one of the world's best-known ride-hailing companies by doing just that.

It utilizes sophisticated machine learning algorithms to predict when people are likely to need rides in certain areas, which helps proactively get drivers on the road before they're needed.

Advantages

Good at detail-oriented jobs

Reduced time for data-heavy tasks

Delivers consistent results

Reduces human error

Disadvantages

It’s costly to implement

Requires deep technical expertise

A limited supply of qualified workers to build AI tools

It only knows what it's been shown

Concerns Surrounding the Advancement and Usage of AI

The key thing to remember about AI is that it learns from data. The model and algorithm underneath are only as good as the data put into them. This means that data availability, bias, improper labelling, and privacy issues can all significantly impact the performance of an AI model.

Data availability and quality are critical for training an AI system. Some of the biggest concerns surrounding AI today relate to potentially biased datasets that may produce unsatisfactory results or exacerbate gender/racial biases within AI systems.

When we research different types of machine learning models, we find that certain models are more susceptible to bias than others. For example, when using deep learning models (e.g., neural networks), the training process can introduce bias into the model if a biased dataset is used during training.

Another source of concern is accountability. For example, should we hold corporations responsible for the actions of intelligent machines they develop? Or should we consider holding machine developers accountable for their work?

Since its beginning, artificial intelligence has come under scrutiny from scientists and the public alike. One common theme is the idea that machines will become so highly developed that humans will not be able to keep up and they will take off on their own, redesigning themselves at an exponential rate.

Another contentious issue is how it may affect human employment. With many industries looking to automate certain jobs through the use of intelligent machinery, there is a concern that people would be pushed out of the workforce. It’s worth noting, however, that the artificial intelligence industry stands to create jobs too.

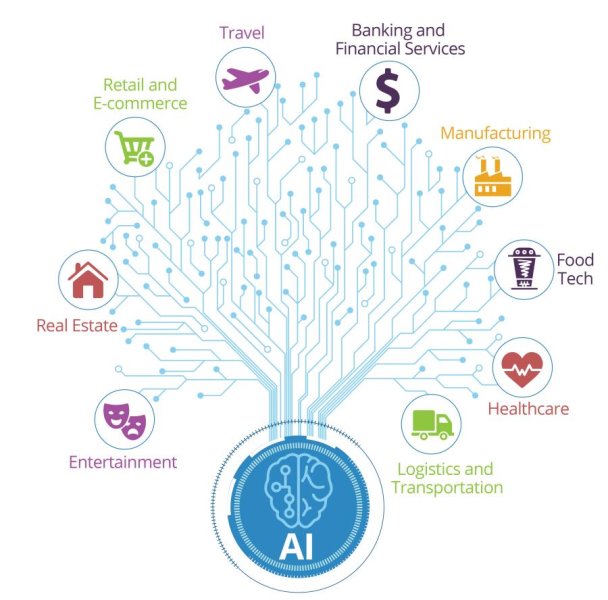

AI Applications

Applications of AI include Natural Language Processing, Gaming, Speech Recognition, Vision Systems, Healthcare, Automotive, etc. Below are some of the most common examples:

Natural Language Processing (NLP): NLP gives machines the ability to read and understand human language. Personal assistants such as Amazon's Alexa and Apple's Siri incorporate speech recognition technology into their systems. You ask the assistant a question, and it answers it for you.

Computer Vision: This AI technology enables computers and systems to derive meaningful information from digital images, videos and other visual inputs, and based on those inputs, it can take action. This ability to provide recommendations distinguishes it from image recognition tasks. Powered by convolutional neural networks, computer vision has applications within photo tagging in social media, radiology imaging in healthcare, and self-driving cars within the automotive industry.

Robotics: This field of engineering focuses on the design and manufacturing of robots. Robots are often used to perform tasks that are difficult for humans to perform or perform consistently. For example, robots are used in assembly lines for car production or by NASA to move large objects in space. Researchers are also using machine learning to build robots that can interact in social settings.

Recommendation Engines: Using past consumption behaviour data, AI algorithms can help to discover data trends that can be used to develop more effective cross-selling strategies. This is used to make relevant add-on recommendations to customers during the checkout process.

Customer Service: Online chatbots are replacing human agents along the customer journey. They answer frequently asked questions (FAQs) around topics, like shipping, or provide personalized advice, cross-selling products or suggesting sizes for users, changing the way we think about customer engagement across websites and social media platforms. Examples include messaging bots on e-commerce sites with virtual agents, messaging apps, such as Slack and Facebook Messenger, and tasks usually done by virtual assistants and voice assistants.

Self-Driving Cars: Autonomous vehicles use a combination of computer vision, image recognition and deep learning to build automated skills at piloting a vehicle while staying in a given lane and avoiding unexpected obstructions, such as pedestrians.

Automation: When paired with AI technologies, automation tools can expand the volume and types of tasks performed. An example is robotic process automation (RPA), a type of software that automates repetitive, rules-based data processing tasks traditionally done by humans.

Machines and computers affect how we live and work. Top companies are continually rolling out revolutionary changes in how we interact with machine-learning technology.

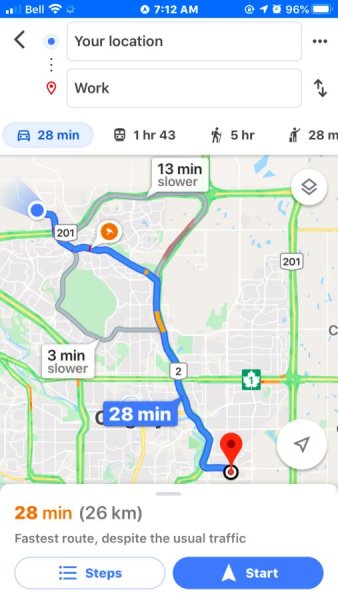

We all know that while traveling, Google Maps can examine the rate of movement of traffic at any given time. This is possible only with the help of AI. Maps can easily integrate with user-reported road incidents like jams and accidents. Maps use large amounts of data that are examined by their algorithms.

Netflix uses viewers' past activity to suggest what they should watch next, keeping them engaged on the platform and increasing watch time.

Watson, a question-answering computer system developed by IBM, has been applied in the medical field, where it has been used to suggest treatment options for patients based on their medical history.

Self-driving cars are moving closer to reality. Companies such as Tesla build cars equipped with the sensors, cameras, and software needed for automated driving, although most systems available today still require a human driver to supervise.

Gmail and other email providers use AI for spam detection, which looks at the subject line and the text of an email and decides if it's junk.
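As a rough idea of how text-based spam detection can work, the sketch below uses a tiny naive Bayes classifier, a classic technique for this task. It is not how Gmail or any particular provider actually works; the training emails, the labels, and the `classify` helper are all invented for illustration.

```python
import math
from collections import Counter

# Tiny invented training set of (text, label) pairs.
emails = [
    ("win a free prize now", "spam"),
    ("free money claim now", "spam"),
    ("meeting agenda for monday", "not spam"),
    ("lunch on monday", "not spam"),
]

# Count how often each word appears under each label.
word_counts = {"spam": Counter(), "not spam": Counter()}
label_counts = Counter()
for text, label in emails:
    word_counts[label].update(text.split())
    label_counts[label] += 1
vocabulary = set(word_counts["spam"]) | set(word_counts["not spam"])


def classify(text):
    """Naive Bayes with add-one smoothing over whitespace-separated words."""
    scores = {}
    for label in word_counts:
        total_words = sum(word_counts[label].values())
        # Start from the log prior, then add one log likelihood per word.
        score = math.log(label_counts[label] / len(emails))
        for word in text.lower().split():
            count = word_counts[label][word] + 1
            score += math.log(count / (total_words + len(vocabulary)))
        scores[label] = score
    return max(scores, key=scores.get)
```

For example, `classify("free prize")` returns `"spam"`, while `classify("monday meeting")` returns `"not spam"`.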

AI is a comprehensive technology that is being applied in almost every industry. Here are a few examples:

AI in Healthcare: AI is helping doctors to diagnose diseases by gathering data from health records, scanning reports, and medical images. This helps doctors to make faster diagnoses and guide the patient for further tests or prescribe medications.

AI in Retail: In retail, AI does everything from stock management to customer service chatbots. As a result, many businesses are taking advantage of AI to improve productivity, efficiency, and accuracy. Chatbots have been incorporated into websites to provide immediate service to customers.

AI in Education: AI can automate grading, giving educators more time. It can assess students and adapt to their needs, helping them work at their own pace. AI tutors can provide additional support to students, ensuring they stay on track. And it could change where and how students learn, perhaps even replacing some teachers.

AI in Finance: AI in personal finance applications, such as Intuit Mint or TurboTax, collects personal data and provides financial advice. Designed to optimize stock portfolios, AI-driven high-frequency trading platforms make thousands or even millions of trades per day without human intervention.

AI in Law: The discovery process -- sifting through documents -- in law is often overwhelming for humans. Using AI to help automate the legal industry's labor-intensive processes is saving time and improving client service.

AI in Manufacturing: Manufacturing has been at the forefront of incorporating robots into the workflow.

AI in Banking: Banks are successfully employing chatbots to make their customers aware of services and offerings and to handle transactions that don't require human intervention. Banking organizations are also using AI to improve their decision-making for loans, set credit limits and identify investment opportunities. AI is used to detect and flag activity in banking and finance such as unusual debit card usage and large account deposits—all of which help a bank's fraud department.

AI in Transportation: In addition to AI's fundamental role in operating autonomous vehicles, AI technologies are used in transportation to manage traffic, predict flight delays, and make ocean shipping safer and more efficient.

AI in Security: Organizations use machine learning in security information and event management (SIEM) software and related areas to detect anomalies and identify suspicious activities that indicate threats. By analyzing data and using logic to identify similarities to known malicious code, AI can provide alerts to new and emerging attacks much sooner than human employees and previous technology iterations. The maturing technology is playing a big role in helping organizations fight off cyber attacks.

AI is today’s dominant technology and will continue to be a significant factor in various industries for years to come.

The Future of AI

In the coming years, most industries will see a significant transformation due to new-age technologies like cloud computing, the Internet of Things (IoT), and Big Data Analytics.

The recent advancements in AI have led to the emergence of a new type of system called Generative Adversarial Networks (GANs), which generate realistic images, text, or audio. GANs are just one example of how AI is changing our lives.

To build an engaging metaverse that appeals to millions of users who want to learn, create, and inhabit virtual worlds, AI must be used to enable realistic simulations of the real world. People need to feel immersed in the environments they participate in. AI is helping to achieve this reality by making objects look more realistic and enabling computer vision so users can interact with simulated objects using their body movements.

AI is real, and it's within your reach. Like anything, you get good at it with practice.

Want to jump on the AI bandwagon?

Explore now and learn how you can use AI to solve real-world problems.